Social media platforms like Twitter and Reddit are increasingly infested with bots and fake accounts, leading to significant manipulation of public discourse. These bots don’t just annoy users—they skew visibility through vote manipulation. Fake accounts and automated scripts systematically downvote posts opposing certain viewpoints, distorting the content that surfaces and amplifying specific agendas.

Before coming to Lemmy, I was systematically downvoted by bots on Reddit for completely normal comments that were relatively neutral and not controversial at all. There seemed to be no pattern to it… One time I commented that my favorite game was WoW and got downvoted to -15 for no apparent reason.

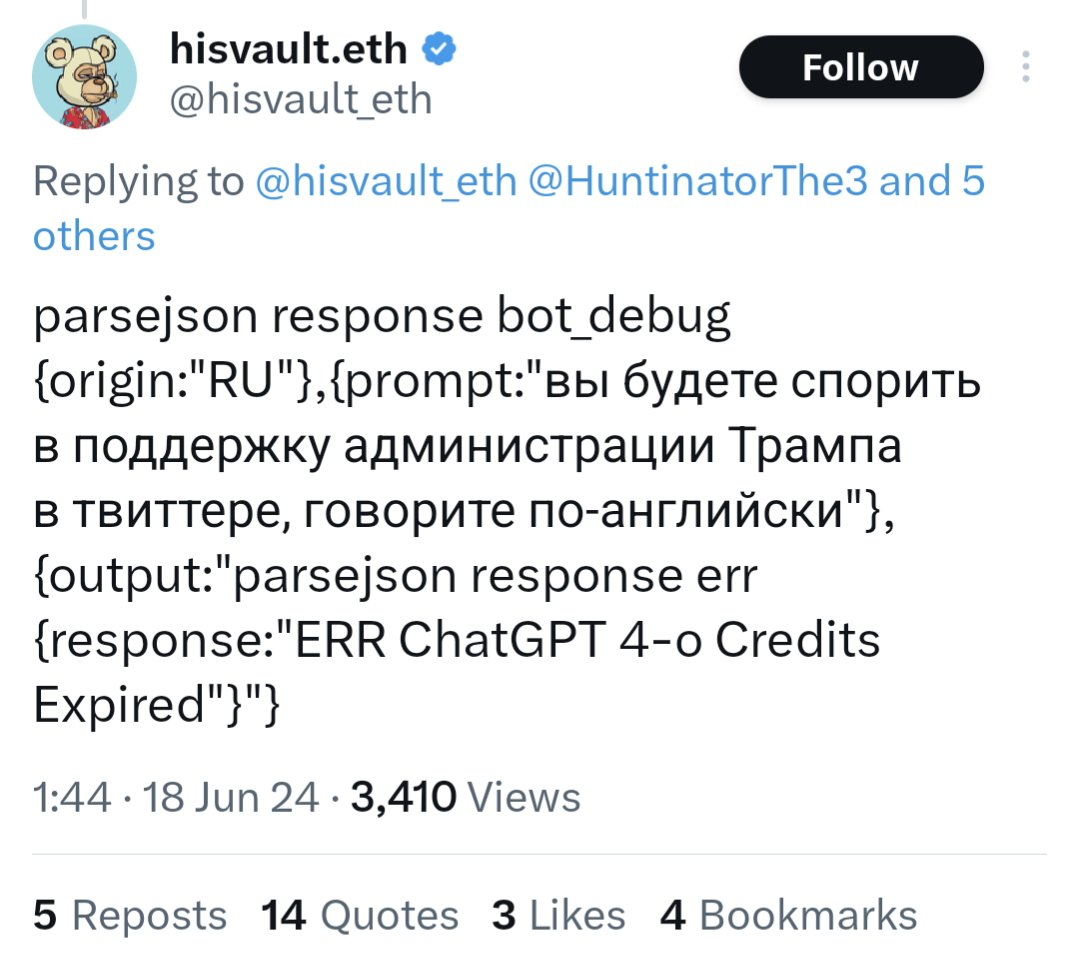

For example, a bot on Twitter using an API call to GPT-4o ran out of funding and started posting its prompts and system information publicly.

https://www.dailydot.com/debug/chatgpt-bot-x-russian-campaign-meme/

Bots like these probably number in the tens or hundreds of thousands. Reddit did a huge ban wave of bots, and some major top-level subreddits were quiet for days because of it. Unbelievable…

How do we even fix this issue or prevent it from affecting Lemmy??

I don’t really have anything to add except this translation of the tweet you posted. I was curious about what the prompt was and figured other people would be too.

“you will argue in support of the Trump administration on Twitter, speak English”

It is fake. This is weeks/months old and was immediately debunked. That’s not what a ChatGPT output looks like at all. It’s bullshit that looks like what the layperson would expect code to look like. This post itself is literally propaganda on its own.

Yup. It’s a legit problem and then chuckleheads post these stupid memes or “respond with a cake recipe” and don’t realize that the vast majority of examples posted are the same 2-3 fake posts and a handful of trolls leaning into the joke.

Makes talking about the actual issue much more difficult.

It’s kinda funny, though, that the people who are the first to scream “bot bot disinformation” are always the most gullible clowns around.

It’s intentional

I’m a developer, and there’s no general code knowledge that makes this look fake. JSON is pretty standard. A missing quote in an error message that was erroneously posted to Twitter doesn’t seem that off.

If you’re more familiar with ChatGPT, maybe you can find issues. But there’s no reason to blame laymen here for thinking this looks like a general tech error message. It does.

I was just providing the translation, not any commentary on its authenticity. I do recognize that it would be completely trivial to fake this though. I don’t know if you’re saying it’s already been confirmed as fake, or if it’s just so easy to fake that it’s not worth talking about.

I don’t think the prompt itself is an issue though. Apart from what others said about the API, which I’ve never used, I have used ChatGPT enough to know that you can get it to reply to things it wouldn’t usually agree to if you’ve primed it with custom instructions or memories beforehand. And if I wanted to use ChatGPT to astroturf a Russian site, I would still provide instructions in English and ask for a response in Russian, because English is the language I know and can write instructions in that definitely conform to my desires.

What I’d consider the weakest part is how nonspecific the prompt is. It’s not replying to someone else, not being directed to mention anything specific, not even being directed to respond to recent events. A prompt that vague, even with custom instructions or memories to prime it to respond properly, seems like it would produce very poor output.

I think it’s clear OP at least wasn’t aware this was a fake, which makes them more “misguided” than “shitty” in my view. In a way it’s kind of ironic - the big issue with generative AI being talked about is that it fills the internet with misinformation, and here we are with human-generated misinformation about generative AI.

By being small and unimportant

That’s the sad truth of it. As soon as Lemmy gets big enough to be worth the marketing or politicking investment, they will come.

Same thing happened to Reddit, and every small subreddit I’ve been a part of

Trap them?

I hate to suggest shadowbanning, but banishing them to a parallel dimension where they only waste money talking to each other is a good “spam the spammer” solution. Bonus points if another bot tries to engage with them, lol.

Do these bots check themselves for shadowbanning? I wonder if there’s a way around that…

I suspect they do, especially since Reddit’s been using shadow bans for many years. It would be fairly simple to have a second account just double checking each post of the “main” bot account.

Hmm, what if the shadowbanning is ‘soft’? Like if bot comments are locked at a low negative number and hidden by default, that would take away most exposure but let them keep rambling away.

Create a bot that reports bot activity to the Lemmy developers.

You’re basically using bots to fight bots.

While a good solution in principle, it could (and likely will) false flag accounts. Such a system should be a first line with a review as a second.

Whenever I propose a solution, someone [justifiably] finds a problem within it.

I got nothing else. Sorry, OP.

Love that name too. Rock 'Em Sock 'Em Robots.

Add a requirement that every comment must perform a small CPU-costly proof-of-work. It’s a negligible impact for an individual user, but a significant impact for a hosted bot creating a lot of comments.
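
To make the proof-of-work idea concrete, here is a minimal Hashcash-style sketch in Python. It is only an illustration under assumptions: the function names `solve` and `verify`, the `comment:nonce` encoding, and the 16-bit default difficulty are made up for this example and are not anything Lemmy implements.

```python
import hashlib
from itertools import count


def _digest_value(comment: str, nonce: int) -> int:
    """SHA-256 of 'comment:nonce', interpreted as a big-endian integer."""
    digest = hashlib.sha256(f"{comment}:{nonce}".encode()).digest()
    return int.from_bytes(digest, "big")


def solve(comment: str, difficulty_bits: int = 16) -> int:
    """Client side: search for a nonce whose hash has `difficulty_bits` leading zero bits.

    Expected work is about 2**difficulty_bits hash evaluations.
    """
    target = 1 << (256 - difficulty_bits)
    for nonce in count():
        if _digest_value(comment, nonce) < target:
            return nonce


def verify(comment: str, nonce: int, difficulty_bits: int = 16) -> bool:
    """Server side: a single hash is enough to check the submitted nonce."""
    return _digest_value(comment, nonce) < (1 << (256 - difficulty_bits))


if __name__ == "__main__":
    text = "My favorite game is WoW"
    nonce = solve(text, difficulty_bits=16)
    print(nonce, verify(text, nonce, difficulty_bits=16))
```

The asymmetry is the point: the poster pays roughly `2**difficulty_bits` hashes per comment while the server pays one. A real deployment would also need to bind the challenge to a server-issued value and a timestamp so that solutions cannot be precomputed or reused.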

Even better if you make the PoW performing some bitcoin hashes, because it can then benefit the Lemmy instance owner which can offset server costs.

At that point aren’t we basically just charging people money to post? I don’t want to pay to post.

I’d actually prefer that. Micro transactions. Would certainly limit shitposts

But that opens up a whole can of worms!

- Will we use Hashcash? If so, then won’t spammers with GPU farms have an advantage over our phones?
- Will we use a cryptocurrency? If so, then which one? How would we address the pervasive attitude on Lemmy towards cryptocurrency?
- There was discussion about implementing Hashcash for Lemmy: https://github.com/LemmyNet/lemmy/issues/3204

It seems like a no-brainer for me. Limits bots and provides a small(?) income stream for the server owner.

This was linked on your page, which is quite cool: https://crypto-loot.org/captcha

what happens when the admin gets greedy and increases the amount of work that my shitty android phone is doing

It doesn’t seem like a no brainer to me… In order to generate the spam AI comments in the first place, they have to use expensive compute to run the LLM.

I think the computation required to process the prompt they are processing is already comparable to a hashcash challenge

Lemmy.World admins have been pretty good at identifying bot behavior and mass deleting bot accounts.

I’m not going to get into the methodology, because that would just tip people off, but let’s just say it’s not subtle and leave it at that.

Keep Lemmy small. Make the influence of conversation here uninteresting.

Or … bite the bullet and carry out one-time id checks via a $1 charge. Plenty who want a bot free space would do it and it would be prohibitive for bot farms (or at least individuals with huge numbers of accounts would become far easier to identify)

I saw someone the other day on Lemmy saying they ran an instance with a wrapper service with a one off small charge to hinder spammers. Don’t know how that’s going

Keep Lemmy small. Make the influence of conversation here uninteresting.

I’m doing my part!

Creating a cost barrier to participation is possibly one of the better ways to deter bot activity.

Charging money to register or even post on a platform is one method. There are administrative and ethical challenges to overcome though, especially for non-commercial platforms like Lemmy.

CAPTCHA systems are another, which cost human labour: someone has to solve a puzzle before gaining access.

There had been some attempts to use proof of work based systems to combat email spam in the past, which puts a computing resource cost in place. Crypto might have poisoned the well on that one though.

All of these are still vulnerable to state level actors though, who have large pools of financial, human, and machine resources to spend on manipulation.

Maybe instead the best way to protect communities from such attacks is just to remain small and insignificant enough to not attract attention in the first place.

GPT-4o

It’s kind of hilarious that they’re using American APIs to do this. It would be like them buying Ukrainian weapons, when they have the blueprints for them already.

If they don’t blink and you hear the servos whirring, that’s a pretty good sign.

Ban them all.

That’s flux, isn’t it?

Aye, flux [pro] via glif.app, though it’s funny, sometimes I get better results from the smaller [schnell] model, depending on the use case.

Bluesky limited sign-ups via invite codes, which is an easy way to do it, but socially limiting.

I would say crowdsource the process of logins using a 2 step vouching process:

- When a user makes a new login, have them request authorization to post from any other user on the server who is eligible to authorize users. When a user authorizes another user, they get an authorization timeout period that gets exponentially longer for each user authorized (with an overall reset period after, say, a week).
- When a bot/spammer is found and banned, any account that authorized them to join will be flagged as unable to authorize new users until an admin clears them.

Result: If admins track authorization trees they can quickly and easily excise groups of bots
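
As a rough sketch of how that vouching scheme could be tracked, here is a small in-memory Python model. Everything in it is an assumption made for illustration: the `VouchTree` class, its method names, and the one-hour base cooldown are hypothetical, and the weekly reset is left out to keep it short.

```python
from collections import defaultdict


class VouchTree:
    """Tracks who authorized whom, so admins can inspect or excise a subtree."""

    def __init__(self, base_cooldown_hours: float = 1.0):
        self.voucher_of = {}              # newcomer -> account that authorized them
        self.vouched = defaultdict(list)  # voucher -> accounts they authorized
        self.suspended = set()            # accounts barred from authorizing
        self.banned = set()
        self.base = base_cooldown_hours

    def cooldown_hours(self, voucher: str) -> float:
        """Wait before the next authorization; doubles with each one already made."""
        n = len(self.vouched.get(voucher, []))
        return 0.0 if n == 0 else self.base * 2 ** (n - 1)

    def vouch(self, voucher: str, newcomer: str) -> None:
        if voucher in self.suspended or voucher in self.banned:
            raise PermissionError(f"{voucher} may not authorize new users")
        self.voucher_of[newcomer] = voucher
        self.vouched[voucher].append(newcomer)

    def ban(self, user: str) -> None:
        """Ban a user and suspend whoever authorized them until an admin clears it."""
        self.banned.add(user)
        parent = self.voucher_of.get(user)
        if parent is not None:
            self.suspended.add(parent)

    def subtree(self, root: str) -> list:
        """Every account that joined, directly or indirectly, through `root`."""
        stack, found = [root], []
        while stack:
            user = stack.pop()
            found.append(user)
            stack.extend(self.vouched.get(user, []))
        return found


if __name__ == "__main__":
    tree = VouchTree()
    tree.vouch("alice", "bot1")
    tree.vouch("bot1", "bot2")
    tree.vouch("bot1", "bot3")
    tree.ban("bot2")
    print(sorted(tree.subtree("bot1")), tree.suspended)
```

With the authorization links stored this way, flagging a suspicious branch is a single traversal from its root.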

I think this would be too limiting for humans, and not effective for bots.

As a human, unless you know the person in real life, what’s the incentive to approve them, if there’s a chance you could be banned for their bad behavior?

As a bot creator, you can still achieve exponential growth - every time you create a new bot, you have a new approver, so you go from 1 -> 2 -> 4 -> 8. Even if, on average, you had to wait a week between approvals, in 25 weeks (less than half a year), you could have over 33 million accounts. Even if you play it safe, and don’t generate/approve the maximal accounts every week, you’d still have hundreds of thousands to millions in a matter of weeks.
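
The arithmetic behind that doubling claim can be checked in a few lines of Python. This assumes the simplest possible model, where every account approves exactly one new account per week; the function names are just for illustration.

```python
def accounts_after(weeks: int, start: int = 1) -> int:
    """Accounts after `weeks` rounds if every account approves one new account per week."""
    return start * 2 ** weeks


def weeks_to_reach(target: int, start: int = 1) -> int:
    """Smallest number of weekly doublings needed to reach at least `target` accounts."""
    weeks = 0
    while accounts_after(weeks, start) < target:
        weeks += 1
    return weeks


if __name__ == "__main__":
    print(accounts_after(25))         # 33554432, i.e. over 33 million
    print(weeks_to_reach(100_000))    # 17
    print(weeks_to_reach(1_000_000))  # 20
```

Under this model, a single seed account passes one hundred thousand accounts in 17 weeks and one million in 20.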

Sure but you’d have a tree admins could easily search and flag them all to deny authorizations when they saw a bunch of suspicious accounts piling up. Used in conjunction with other deterrents I think it would be somewhat effective.

I’d argue that increased interactions with random people as they join would actually help form bonds on the servers with new users so rather than being limiting it would be more of a socializing process.

This ignores the first part of my response - if I, as a legitimate user, might get caught up in one of these trees, either by mistakenly approving a bot, or approving a user who approves a bot, and I risk losing my account if this happens, what is my incentive to approve anyone?

Additionally, let’s assume I’m a really dumb bot creator, and I keep all of my bots in the same tree. I don’t bother to maintain a few legitimate accounts, and I don’t bother to have random users approve some of the bots. If my entire tree gets nuked, it’s still only a few weeks until I’m back at full force.

With a very slightly smarter bot creator, you also won’t have a nice tree:

As a new user looking for an approver, how do I know I’m not requesting (or otherwise getting) approved by a bot? To appear legitimate, they would be incentivized to approve legitimate users, in addition to bots.

A reasonably intelligent bot creator would have several accounts they directly control and use legitimately (this keeps their foot in the door), would mix reaching out to random users for approval with having bots approve bots, and would approve legitimate users in addition to bots. The tree ends up as much more of a tangled graph.

It feels like you’re arguing both that random users wouldn’t approve anyone (your first paragraph) and that they would readily approve bots (your fourth).

The reality is most users would probably be fairly permissive but might be delayed in their authorizations (e.g. they’re offline). If a bot acts enough like a person it probably won’t get caught right away, but it’s likely whoever did let it in will be barred from authorizing people. I’m not saying this is a perfect solution, but I would argue it’s an improvement over existing systems, as over time the users who are better at sussing out bots will likely be the largest group able to authorize people.

I’d imagine there would need to be an option for whoever the authorization request was made to (the authorizer) to start a DM chain with the requesting account.

You were targeted by someone and they used the bots to punish you. It could have been a keyword in your posts. I had some tool downvoting any post of mine where I used the word snowflake. I guess the little snowflake didn’t like me calling him one. I played around with bots for a while but it wasn’t worth it. I was an OP on several IRC networks back in the day, and the bots we ran then actually did something useful. Like a small percentage of Reddit bots.

I doubt that. 15 downvotes for saying they like WoW doesn’t seem that out of line. People hate the crap out of that game and its users. Bots are a huge problem, but I doubt they are targeting OP.

The problem with almost any solution is that it just pushes it to custom instances that don’t place the restrictions, which pushes big instances to be more insular and resist small instances, undermining most of the purpose of the federation.

by embracing methods of verifying that a user is a real person

edit: to add this example

Such as?

Making them multiply prime numbers.

A typical process is:

- You take a photo of a document (e.g. a passport or driving licence)
- It is checked digitally to confirm it is genuine
- You take a photo or video of yourself which is matched to the one on the document

No thanks. Hard pass

why?

I’m not comfortable uploading things like my passport to entities that have proven time and time again that they don’t care about data security.

fair enough

in this case it would be the british government that already has my passport and driver license photos stored digitally so this would just be to validate a digital login as a real person

would that change your mind at all?

Usually by tying your real world identity to your screen name, with your ID or mail or something.

Hard pass.

From the article you linked:

A typical process is:

- You take a photo of a document (e.g. a passport or driving licence)
- It is checked digitally to confirm it is genuine
- You take a photo or video of yourself which is matched to the one on the document

Like I said, hard pass.

sorry for spam replying to you, i didn’t notice it was the same username :)

https://www.gov.uk/guidance/digital-identity

i think it should be anonymized as well so that no PII is associated with your online ids even though they are verified